Filebeat Binary Installation and Startup

Installing Filebeat

Binary installation of Filebeat

tar -zxf filebeat-6.6.0-linux-x86_64.tar.gz

mv filebeat-6.6.0-linux-x86_64 /usr/local/filebeat-6.6.0

Sending logs from Filebeat directly to ES: configure /usr/local/filebeat-6.6.0/filebeat.yml

    filebeat.inputs:
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /usr/local/nginx/logs/access.log
    output:
      elasticsearch:
        hosts: ["192.168.1.10:9200"]

- Start in the foreground: /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml

- Start in the background: nohup /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml >/tmp/filebeat.log 2>&1 &
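It is easy to lose track of a process started with nohup. A small wrapper that records the PID makes stopping and restarting more predictable. The following is only a sketch and not part of the original setup: the function names start_bg and stop_bg and the PID file path are assumptions chosen for illustration.

```shell
#!/bin/sh
# Hypothetical helpers: start a command in the background and remember its PID.
# PIDFILE and LOGFILE defaults are assumptions; override them as needed.
PIDFILE="${PIDFILE:-/tmp/filebeat.pid}"
LOGFILE="${LOGFILE:-/tmp/filebeat.log}"

start_bg() {
    # Refuse to start a second copy while the recorded PID is still alive.
    if [ -f "$PIDFILE" ] && kill -0 "$(cat "$PIDFILE")" 2>/dev/null; then
        echo "already running with PID $(cat "$PIDFILE")" >&2
        return 1
    fi
    # Same pattern as the background command above: nohup, one log file.
    nohup "$@" >"$LOGFILE" 2>&1 &
    echo $! >"$PIDFILE"
}

stop_bg() {
    # Send SIGTERM to the recorded PID, then forget it.
    [ -f "$PIDFILE" ] || return 0
    kill "$(cat "$PIDFILE")" 2>/dev/null || true
    rm -f "$PIDFILE"
}
```

With these loaded, `start_bg /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml` starts Filebeat and `stop_bg` stops it again.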

Filebeat + Logstash: the new architecture

About Filebeat and Logstash

- Logstash -> Elasticsearch -> Kibana

- Filebeat -> Elasticsearch -> Kibana

- Filebeat -> Logstash -> Elasticsearch -> Kibana

Configure Filebeat to send to Logstash: /usr/local/filebeat-6.6.0/filebeat.yml

    filebeat.inputs:
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /usr/local/nginx/logs/access.log
    output:
      logstash:
        hosts: ["192.168.1.11:5044"]

Configure Logstash to listen on port 5044 and receive the logs sent by Filebeat: /usr/local/logstash-6.6.0/config/logstash.conf

    input {
      beats {
        host => '0.0.0.0'
        port => 5044
      }
    }

Kill the Logstash process and start it again to load the new configuration: nohup /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf >/tmp/logstash.log 2>&1 &
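Logstash takes a while to start, so it can help to confirm that the beats input is actually listening before pointing Filebeat at it. The sketch below assumes the `ss` tool is available on the host; the helper name listening_on is made up for this example.

```shell
#!/bin/sh
# listening_on PORT: read `ss -lnt` output on stdin and succeed if some
# socket in LISTEN state is bound to PORT (any address, IPv4 or IPv6).
listening_on() {
    awk -v port="$1" '
        $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}
```

Typical use would be `ss -lnt | listening_on 5044 && echo "beats input is up"`.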

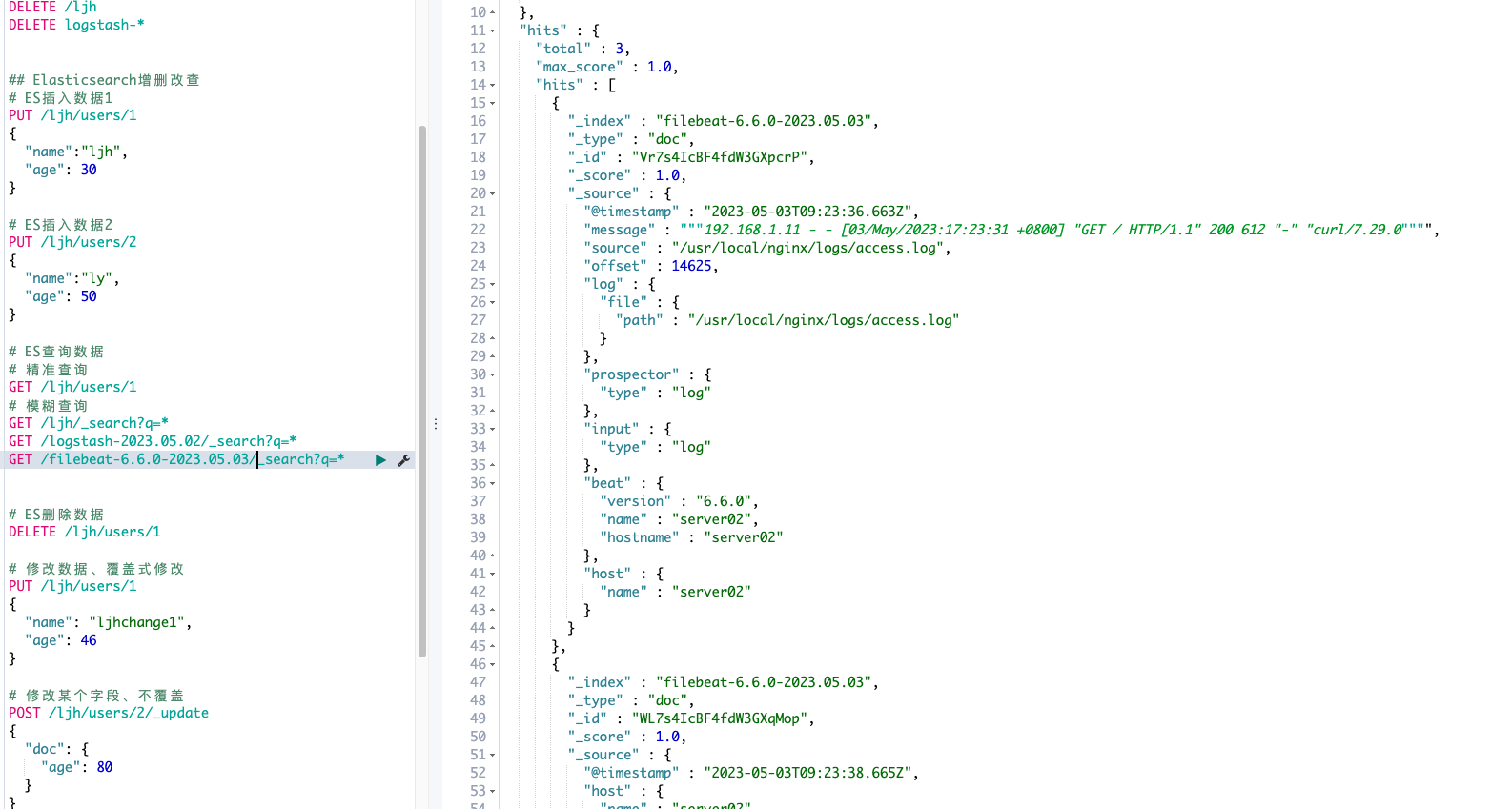

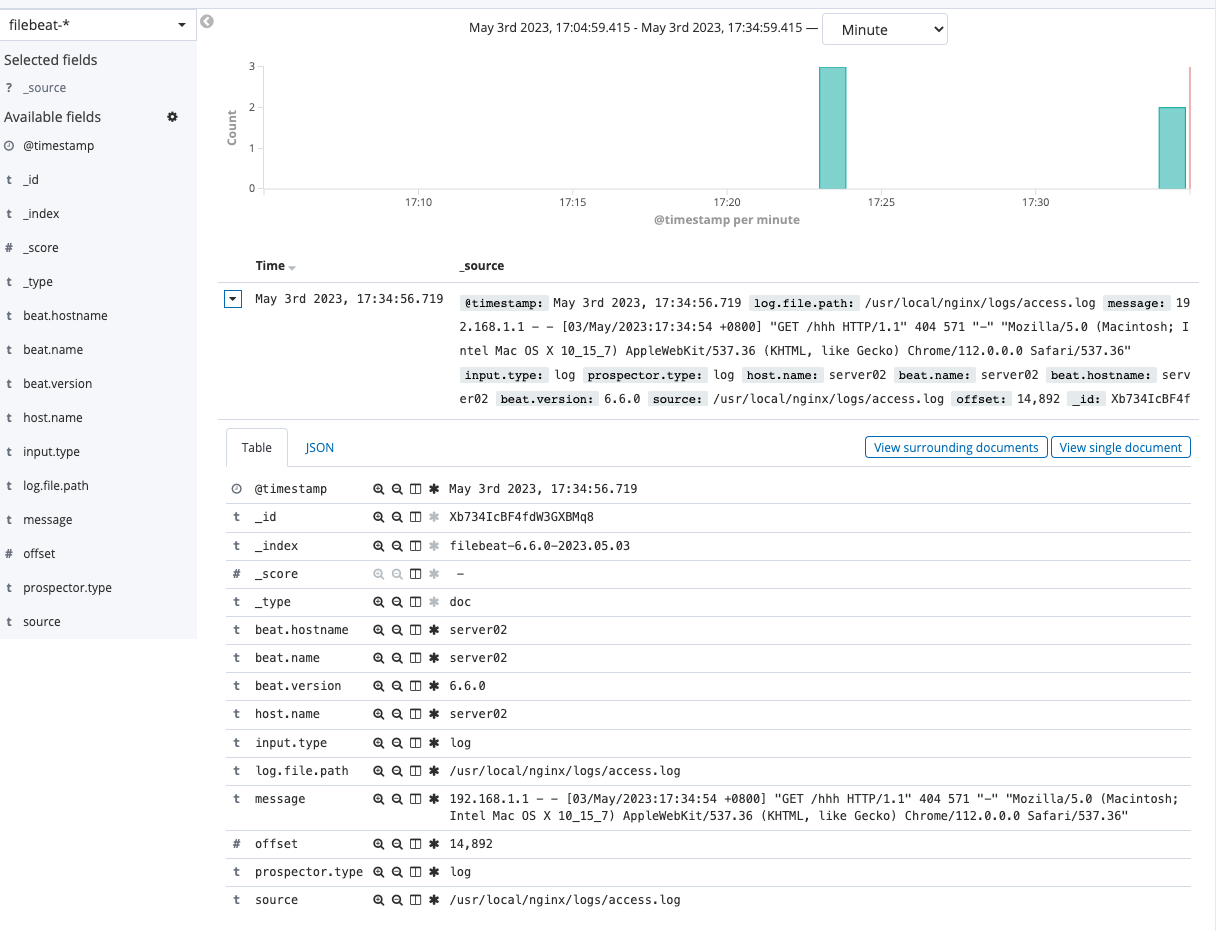

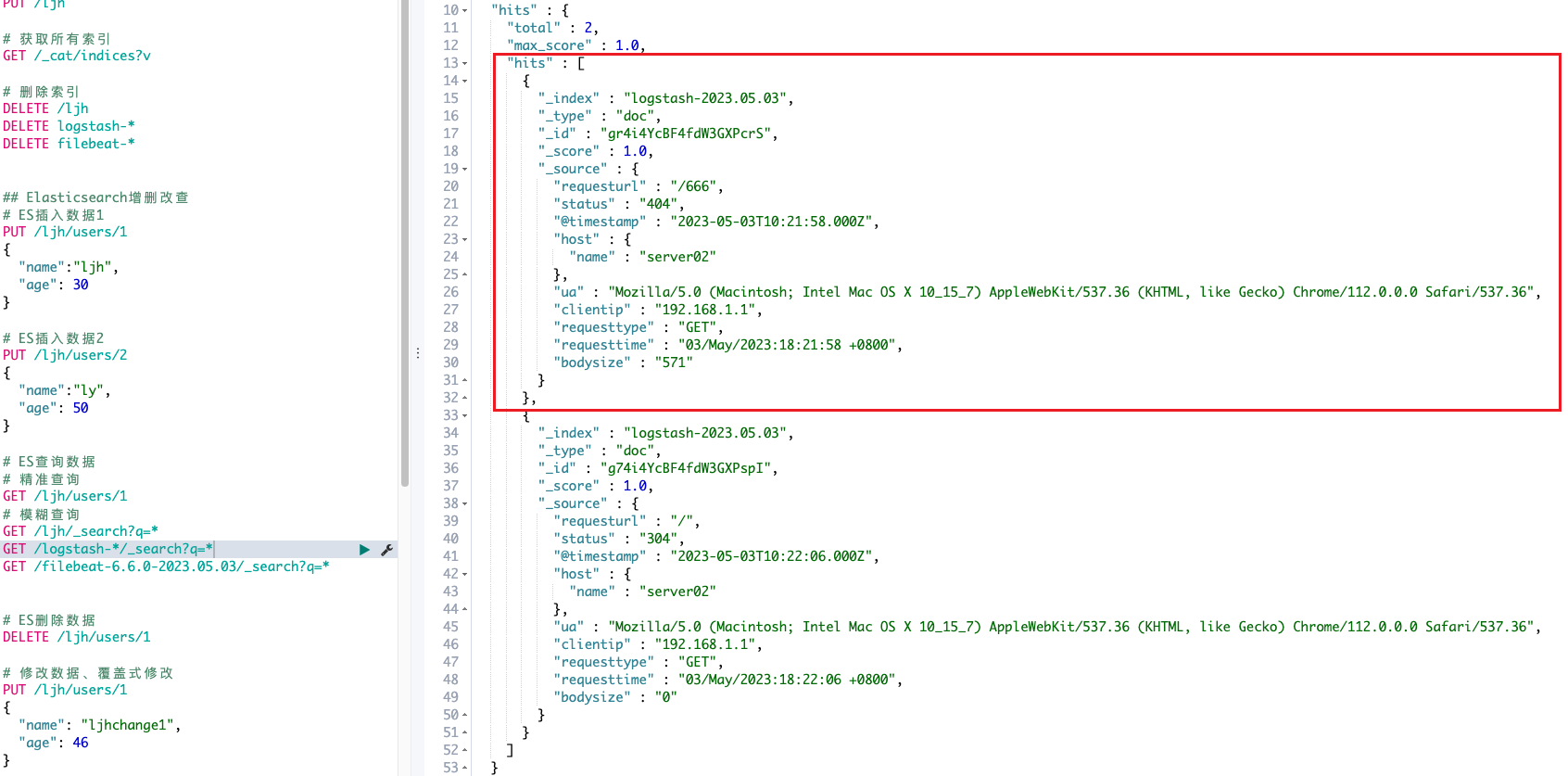

Visit the Nginx page

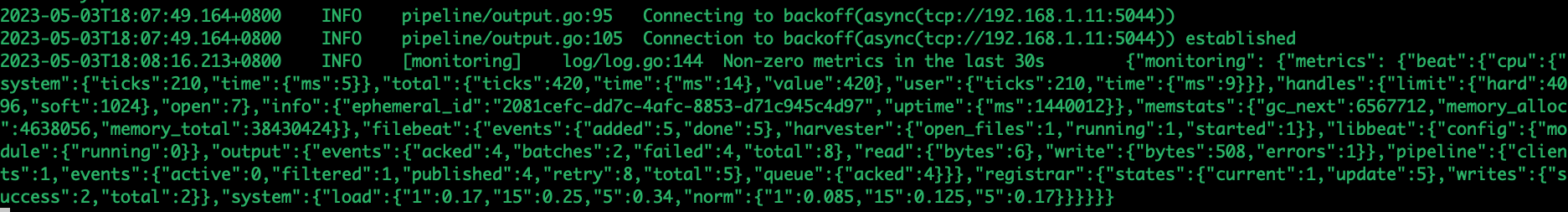

Check the Filebeat log

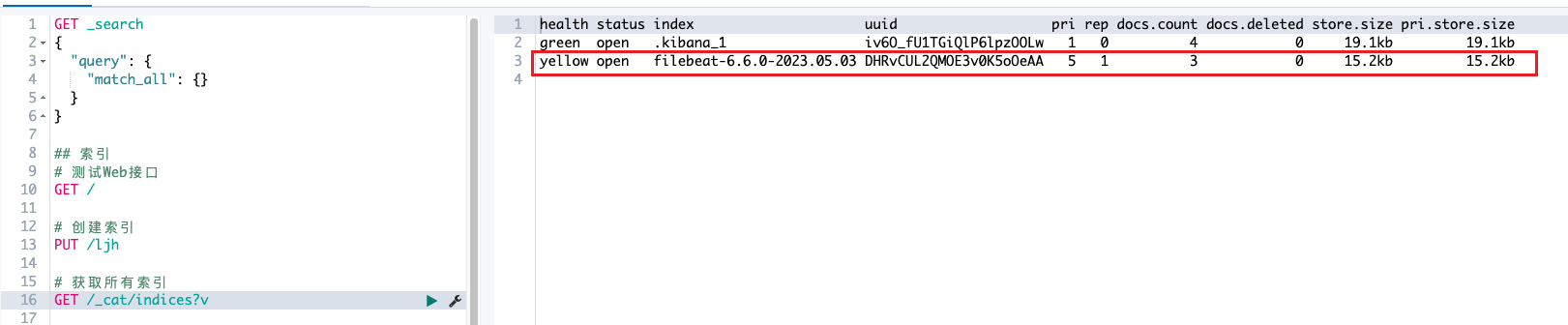

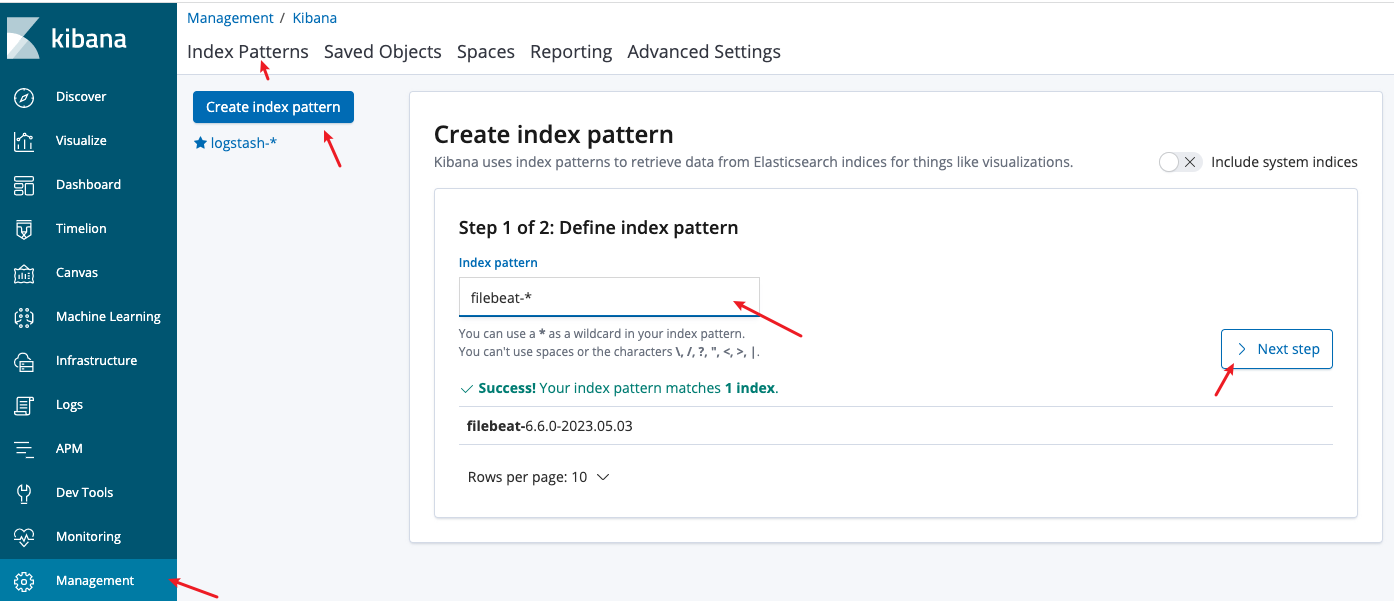

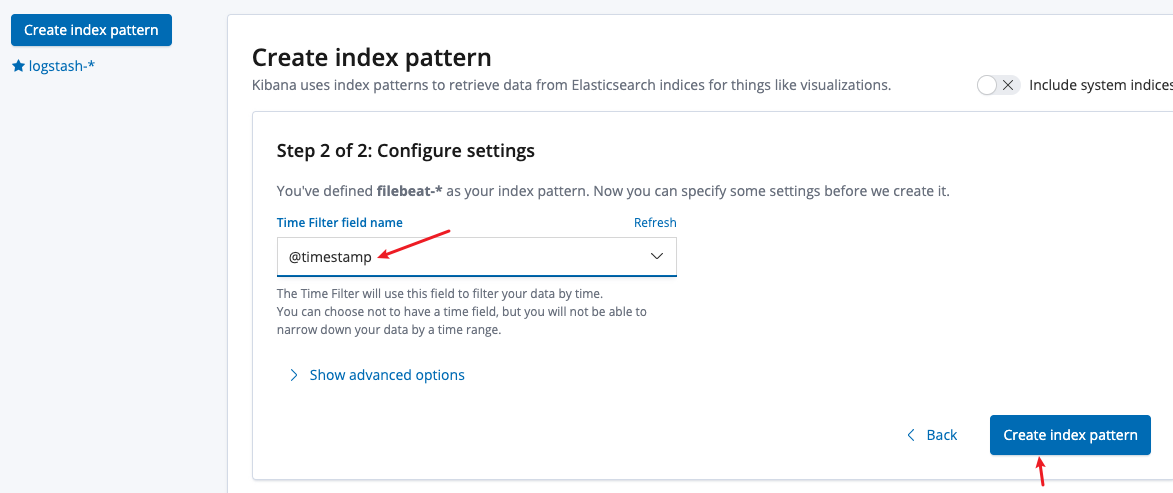

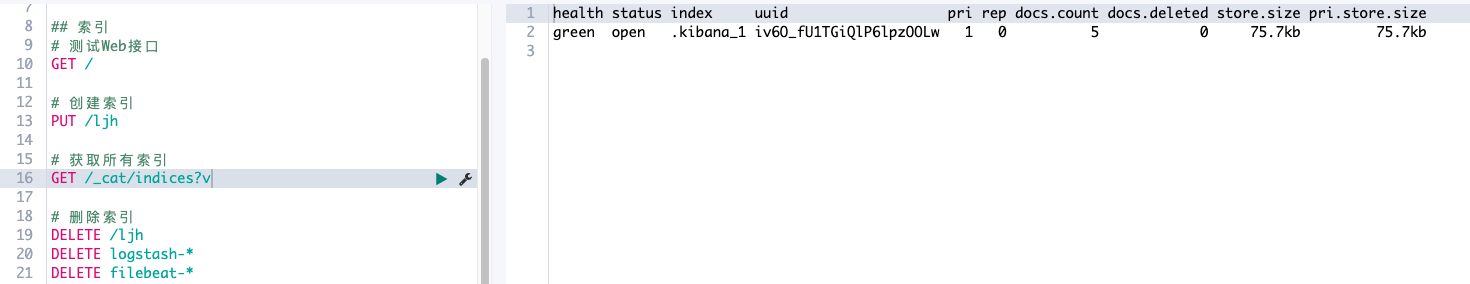

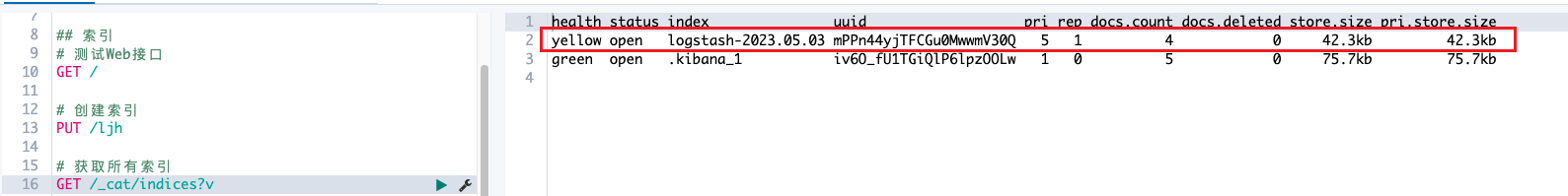

自动生成新索引

- Filebeat发过来的无用字段比较多

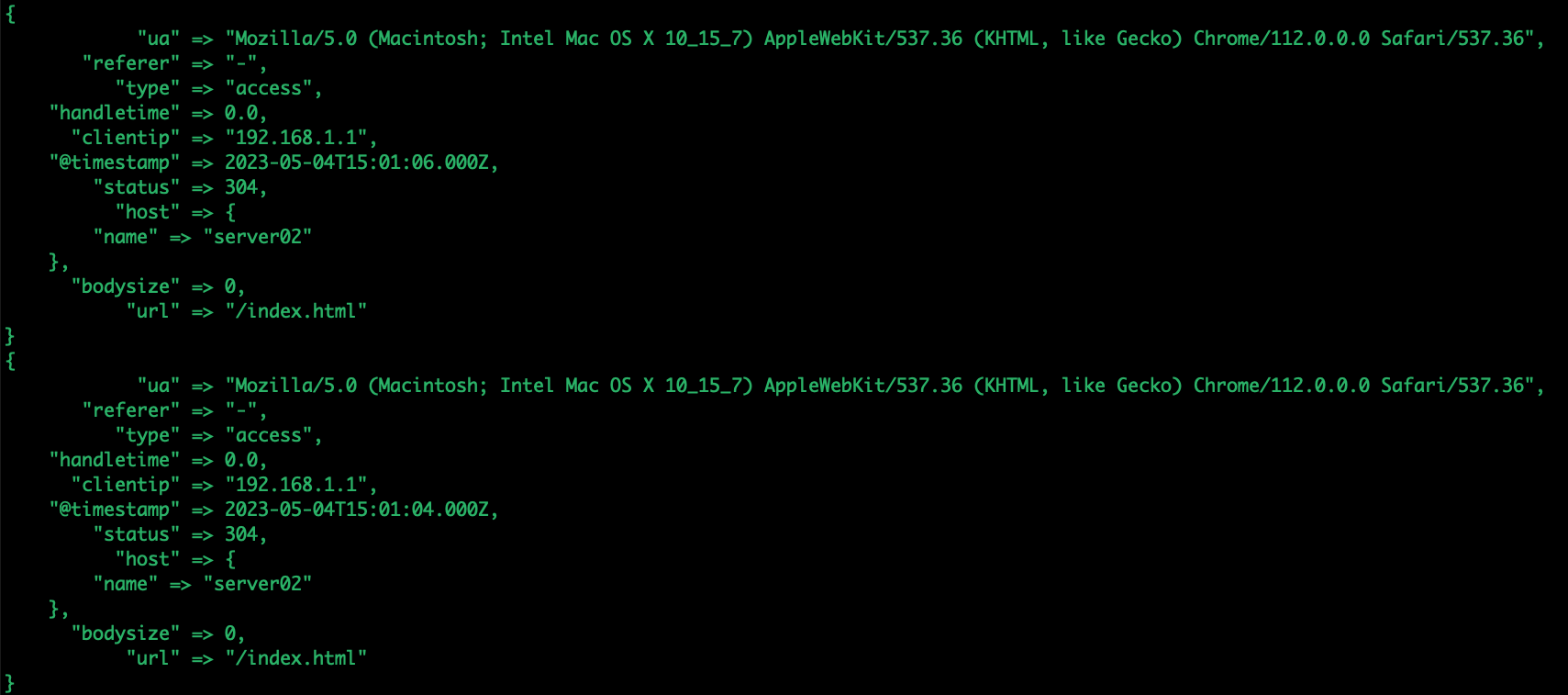

- remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]

删除相应字段后的展示结果:

Filebeat(多台) -> Logstash(正则) -> Elasticsearch(入库) -> Kibana展现

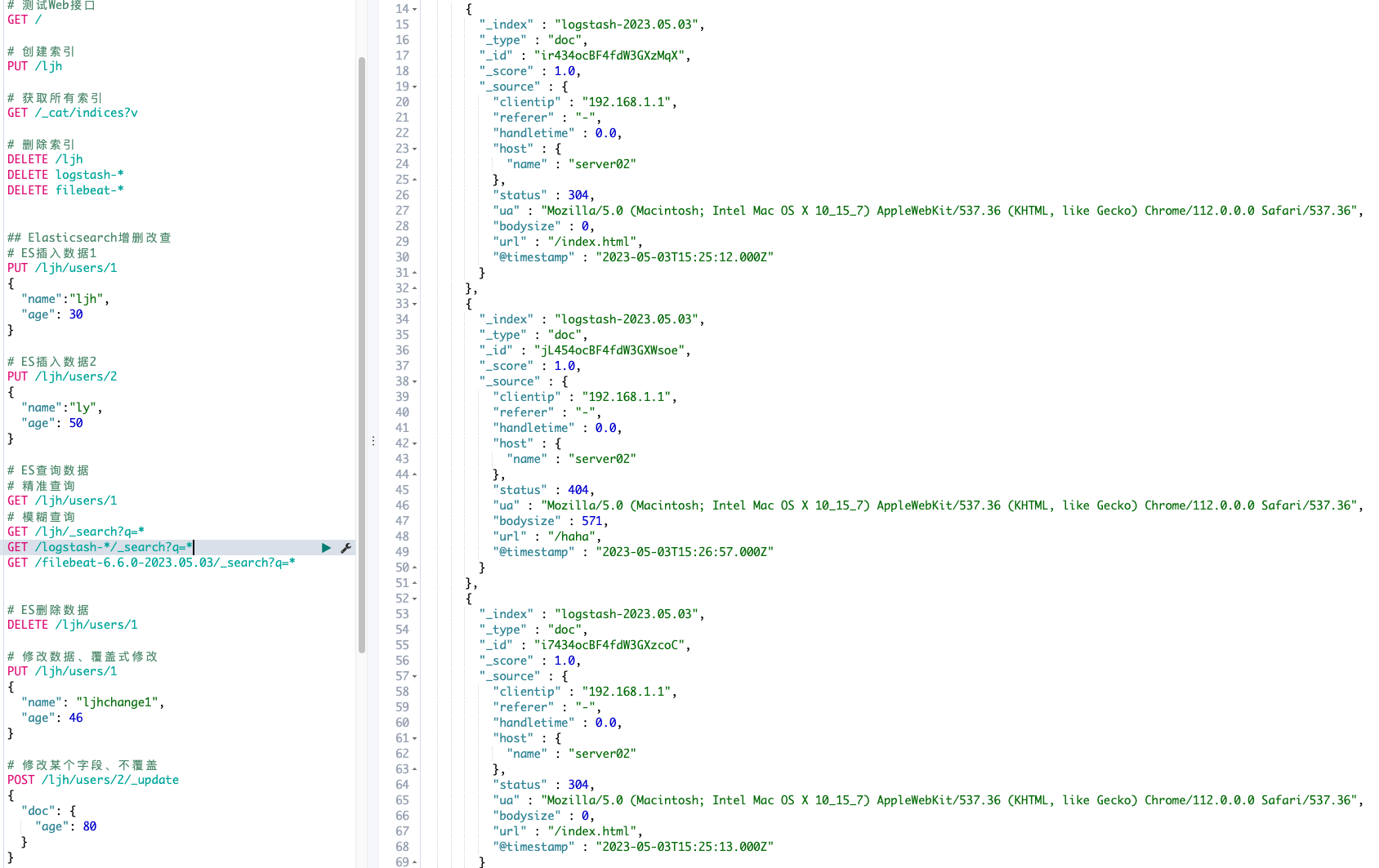
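The regex stage in this pipeline is a grok pattern for the default Nginx access-log format. A rough way to sanity-check such a pattern against a single log line, without restarting Logstash, is to translate it into a POSIX extended regex and run it through sed. This is only an approximation of grok (sed has no named captures, so groups are positional), and the function name parse_access is an assumption for this example.

```shell
#!/bin/sh
# parse_access: read Nginx access-log lines on stdin and print
# "clientip requesttype requesturl status bodysize" for each line that matches.
# Lines that do not match print nothing, the shell analogue of a grok failure.
# Capture groups: 1 clientip, 2 requesttime, 3 requesttype, 4 requesturl,
#                 5 status, 6 bodysize, 7 ua.
parse_access() {
    sed -n -E 's#^([0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[([^ ]+ \+[0-9]+)\] "([A-Z]+) ([^ ]+) HTTP/[0-9]\.[0-9]" ([0-9]+) ([0-9]+) "[^"]+" "([^"]+)".*$#\1 \3 \4 \5 \6#p'
}
```

Feeding it a real line, for example `tail -n 1 /usr/local/nginx/logs/access.log | parse_access`, shows quickly whether the pattern still fits the log format.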

Collecting JSON-format logs

Make Nginx write JSON-format logs: /usr/local/nginx/conf/nginx.conf

    log_format json '{"@timestamp":"$time_iso8601",'
                    '"clientip":"$remote_addr",'
                    '"status":$status,'
                    '"bodysize":$body_bytes_sent,'
                    '"referer":"$http_referer",'
                    '"ua":"$http_user_agent",'
                    '"handletime":$request_time,'
                    '"url":"$uri"}';
    access_log logs/access.log;
    access_log logs/access.json.log json;

/usr/local/nginx/sbin/nginx -s reload
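After the reload, each request should add one JSON object per line to access.json.log. A quick spot check of individual fields can be done with a toy extractor like the one below. It is a sketch only: json_field is a made-up helper, and it handles just flat, single-line JSON. A real JSON tool is the better choice for anything beyond a spot check.

```shell
#!/bin/sh
# json_field NAME: print the value of one top-level field from single-line,
# flat JSON on stdin. Toy parser: it stops at the first comma or quote, so
# values that contain commas (many real user agents) are cut short.
json_field() {
    sed -n -E 's/.*"'"$1"'":"?([^",}]*)"?[,}].*/\1/p'
}
```

For example, `tail -n 1 /usr/local/nginx/logs/access.json.log | json_field status` prints the status code of the most recent request.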

Collecting the JSON-format log with Filebeat: /usr/local/filebeat-6.6.0/filebeat.yml

    filebeat.inputs:
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /usr/local/nginx/logs/access.json.log
    output:
      logstash:
        hosts: ["192.168.1.11:5044"]

Restart Filebeat:

nohup /usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml >/tmp/filebeat.log 2>&1 &

Backup of the Logstash regex-extraction configuration: /usr/local/logstash-6.6.0/config/logstash.conf

    filter {
      grok {
        match => {
          "message" => '(?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+) "[^"]+" "(?<ua>[^"]+)"'
        }
        remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
      }
      date {
        match => ["requesttime", "dd/MMM/yyyy:HH:mm:ss Z"]
        target => "@timestamp"
      }
    }

Parsing JSON logs with Logstash: the json filter reads the field named in source, and the @timestamp it extracts from the log automatically overwrites the event timestamp

    input {
      beats {
        host => '0.0.0.0'
        port => 5044
      }
    }
    filter {
      json {
        source => "message"
        remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
      }
    }
    output {
      elasticsearch {
        hosts => ["http://192.168.1.10:9200"]
      }
    }

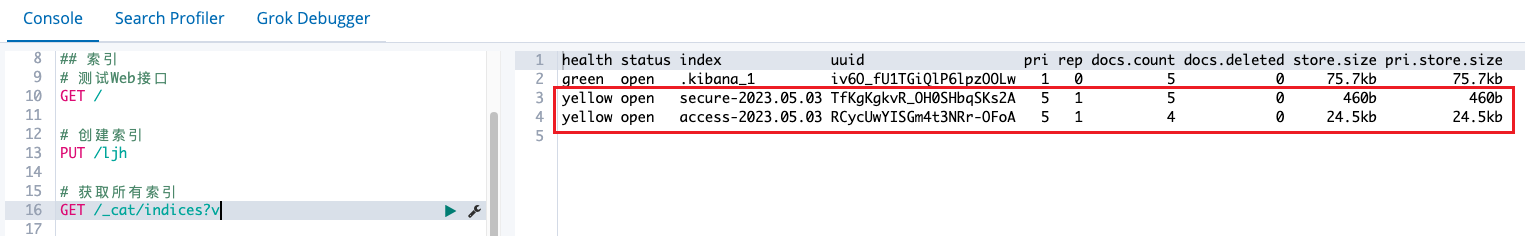

Collecting multiple logs with Filebeat

Filebeat configuration for multiple logs: /usr/local/filebeat-6.6.0/filebeat.yml

    filebeat.inputs:
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /usr/local/nginx/logs/access.json.log
      fields:
        type: access
      fields_under_root: true
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /var/log/secure
      fields:
        type: secure
      fields_under_root: true
    output:
      logstash:
        hosts: ["192.168.1.11:5044"]

Logstash branches on the type field and writes each log to its own index: /usr/local/logstash-6.6.0/config/logstash.conf

    input {
      beats {
        host => '0.0.0.0'
        port => 5044
      }
    }
    filter {
      if [type] == "access" {
        json {
          source => "message"
          remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
        }
      }
    }
    output {
      if [type] == "access" {
        elasticsearch {
          hosts => ["http://192.168.1.10:9200"]
          index => "access-%{+YYYY.MM.dd}"
        }
      }
      else if [type] == "secure" {
        elasticsearch {
          hosts => ["http://192.168.1.10:9200"]
          index => "secure-%{+YYYY.MM.dd}"
        }
      }
    }
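The routing in the output section comes down to a lookup from the type field to an index name with a date suffix. The sketch below mirrors that logic in shell so the expected index name can be computed when checking Elasticsearch. The function name index_for is an assumption for this example. Note that Logstash formats %{+YYYY.MM.dd} from the event's @timestamp in UTC, so the day should be given in UTC as well.

```shell
#!/bin/sh
# index_for TYPE DAY: print the index name the output section would use
# for an event of the given type on DAY (formatted YYYY.MM.dd).
# Returns 1 for types that no branch of the conditional handles; such
# events are not written to Elasticsearch at all.
index_for() {
    case "$1" in
        access|secure) printf '%s-%s\n' "$1" "$2" ;;
        *) return 1 ;;
    esac
}
```

For example, `curl -s "http://192.168.1.10:9200/_cat/indices/$(index_for secure "$(date -u +%Y.%m.%d)")?v"` should list today's secure index once events have arrived.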

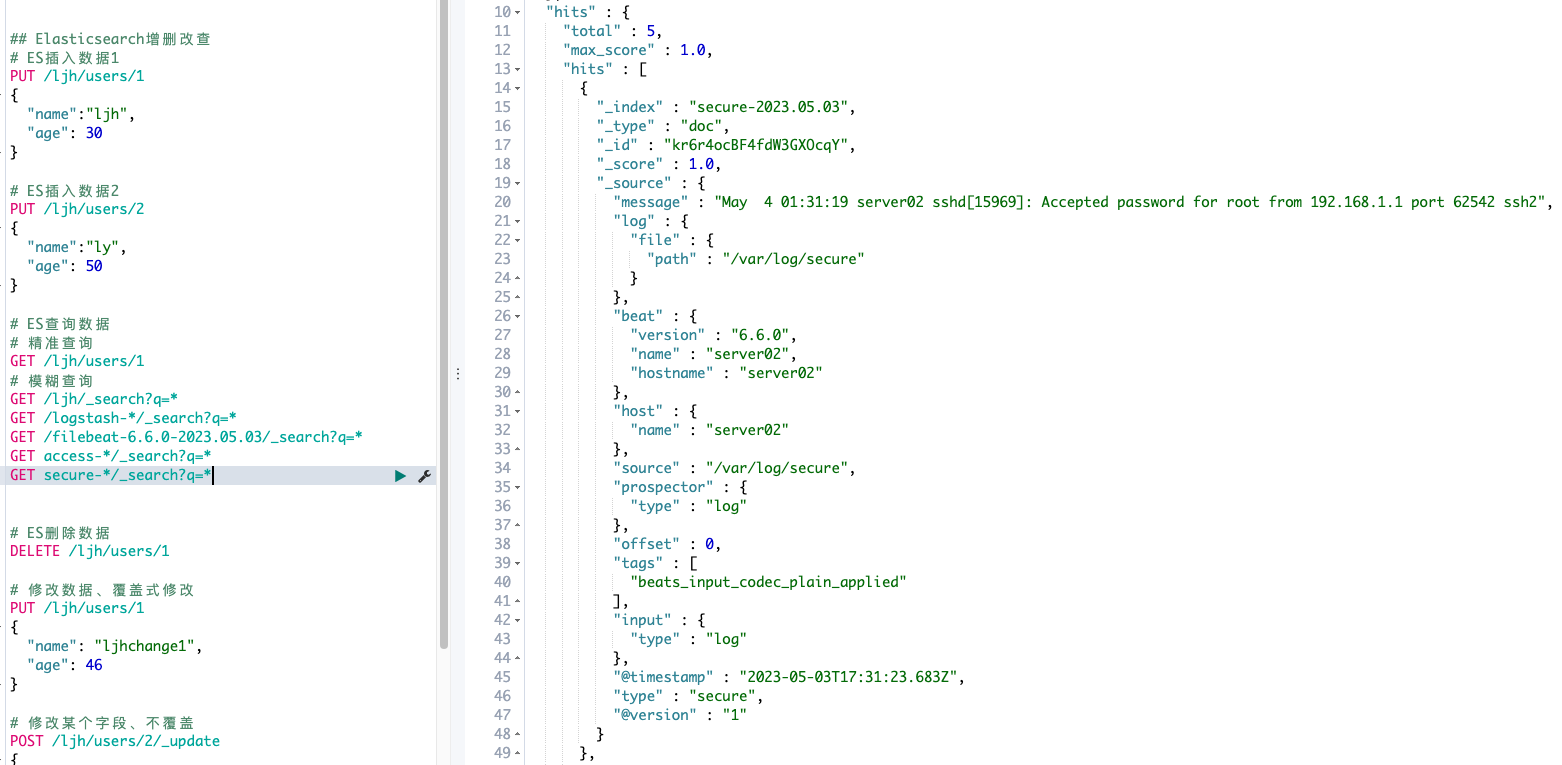

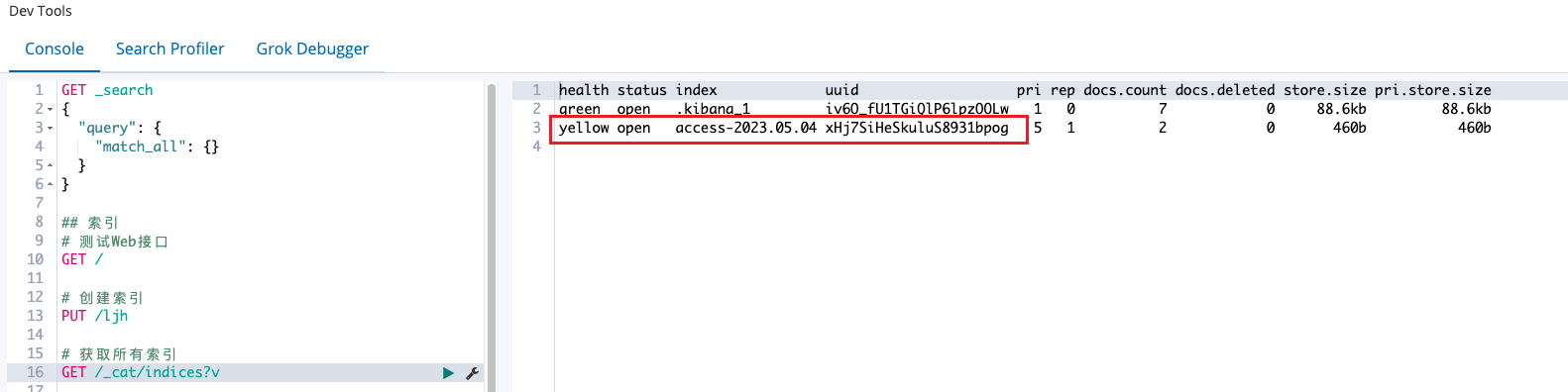

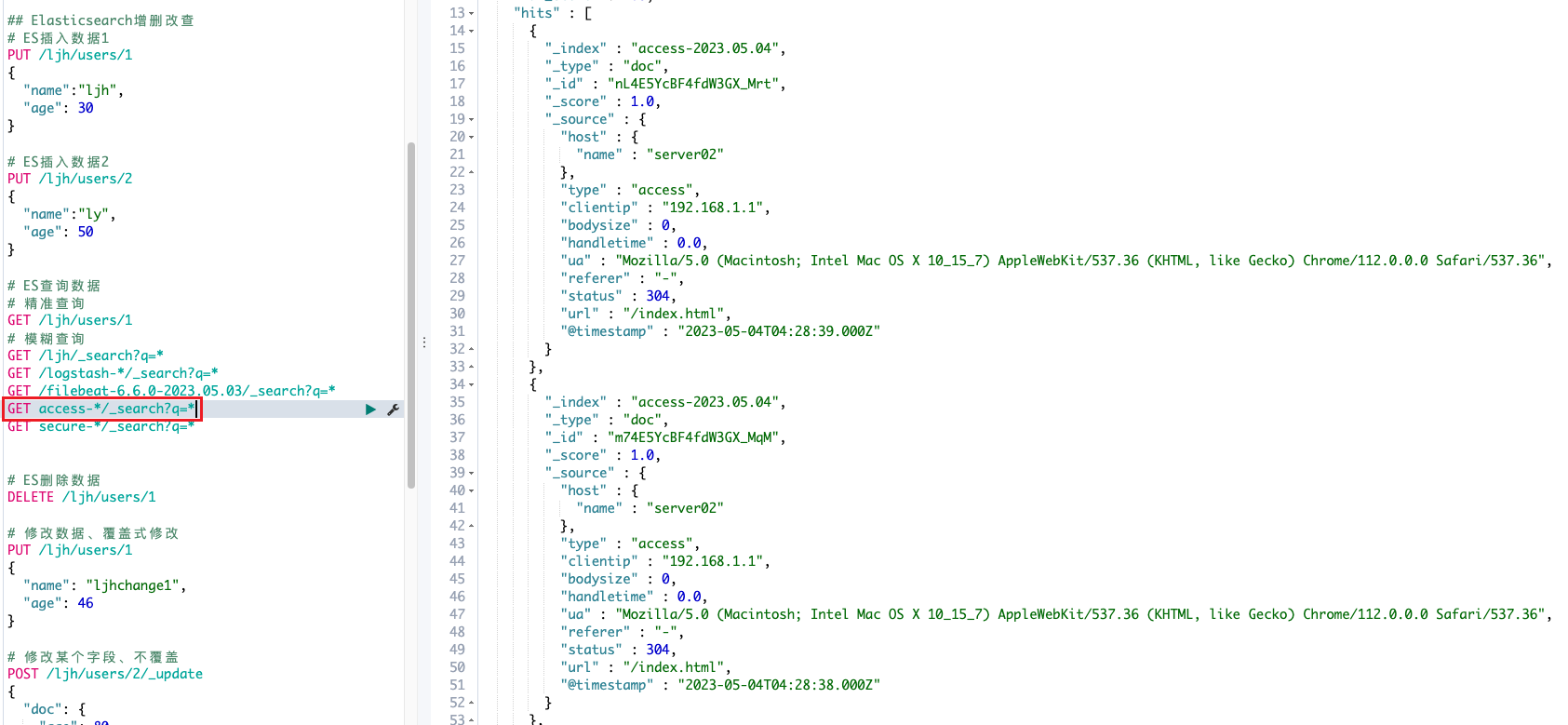

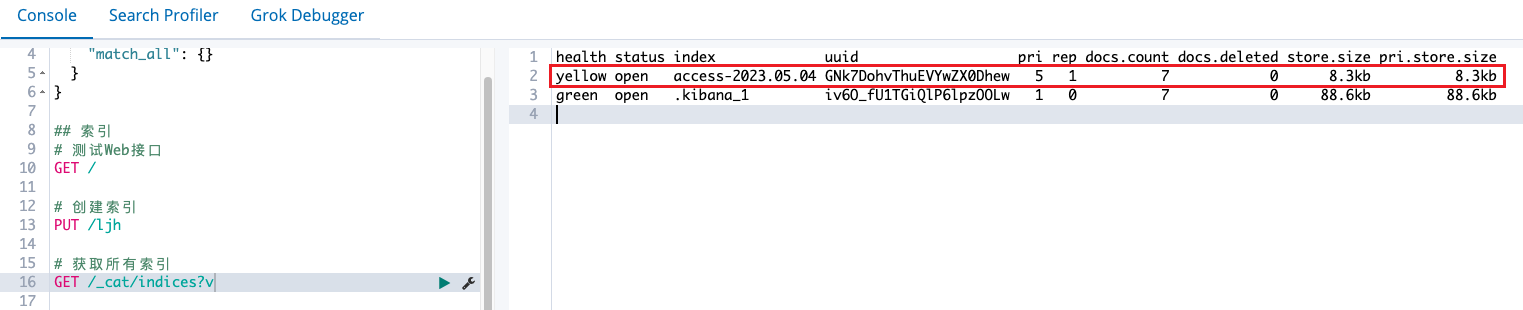

Viewing the indices

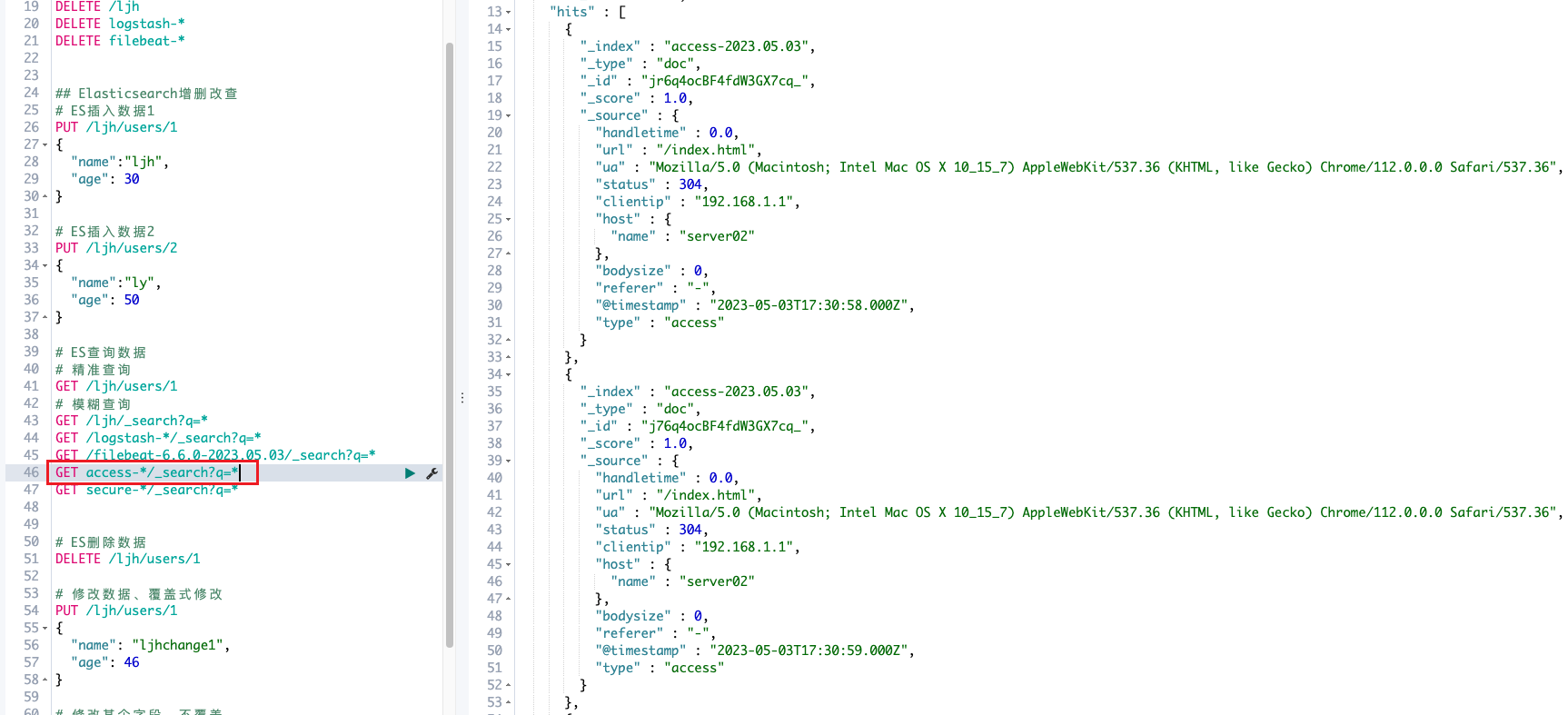

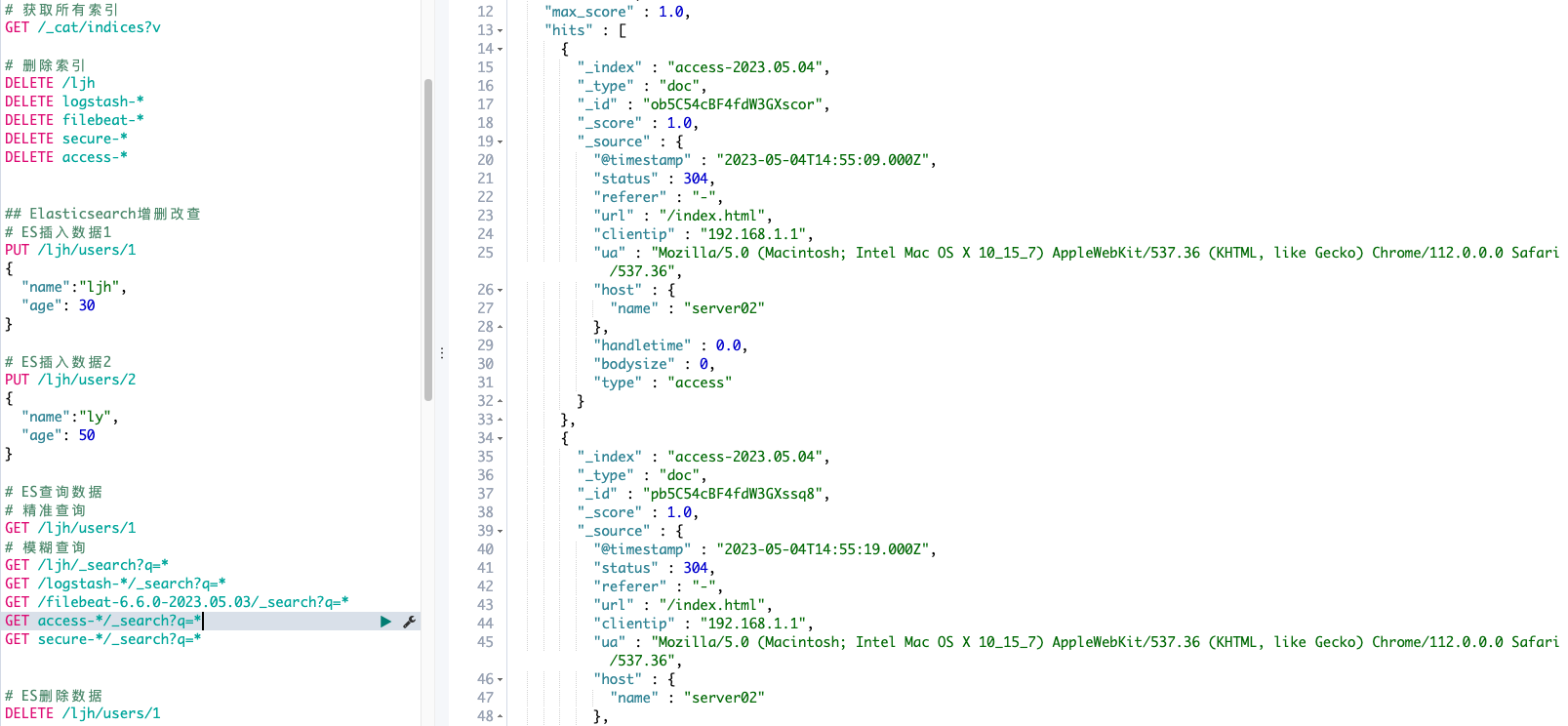

access log

secure log

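Compiling and installing the Redis server

Previous architecture

Filebeat (multiple hosts) -> Logstash (regex) -> Elasticsearch (storage) -> Kibana (display)

A message queue lets requests be handled asynchronously, which relieves pressure on the system. Message queues are commonly used for asynchronous processing, traffic peak shaving, application decoupling, and messaging.

Optimized architecture

Filebeat (multiple hosts) -> Redis or Kafka -> Logstash (regex) -> Elasticsearch (storage) -> Kibana (display)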

yum install -y wget net-tools gcc gcc-c++ make tar openssl openssl-devel cmake

wget 'http://download.redis.io/releases/redis-4.0.9.tar.gz'

tar -zxf redis-4.0.9.tar.gz

cd redis-4.0.9

make

mkdir -pv /usr/local/redis/conf /usr/local/redis/bin

cp src/redis* /usr/local/redis/bin/

cp redis.conf /usr/local/redis/conf

/usr/local/redis/bin/redis-server /usr/local/redis/conf/redis.conf

/usr/local/redis/bin/redis-cli
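The Filebeat and Logstash settings in this article connect to Redis at 192.168.1.11:6379 with the password 'ljh', but the matching redis.conf changes are not shown. The sketch below is one way to apply them. The helper name set_directive is made up, and the three directives it would be used for (bind, requirepass, daemonize) are assumptions about what the original setup changed.

```shell
#!/bin/sh
# set_directive FILE KEY VALUE: make "KEY VALUE" the only active line for KEY.
# Commented-out examples in the file are left untouched.
set_directive() {
    file=$1 key=$2 value=$3
    # Copy every line except active ones for KEY; grep exits 1 if nothing is left.
    grep -v -E "^$key[[:space:]]" "$file" >"$file.tmp" || true
    printf '%s %s\n' "$key" "$value" >>"$file.tmp"
    mv "$file.tmp" "$file"
}
```

Assumed usage: `set_directive /usr/local/redis/conf/redis.conf requirepass ljh`, likewise `bind 0.0.0.0` and `daemonize yes`, followed by a restart of redis-server. Binding to all interfaces is a convenience for a lab network; a narrower bind address is safer elsewhere.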

Filebeat和Logstash间引入Redis

filebeat.inputs:

- type: log

tail_files: true

backoff: "1s"

paths:

- /usr/local/nginx/logs/access.json.log

fields:

type: access

fields_under_root: true

output:

redis:

hosts: ["192.168.1.11"]

port: 6379

password: 'ljh'

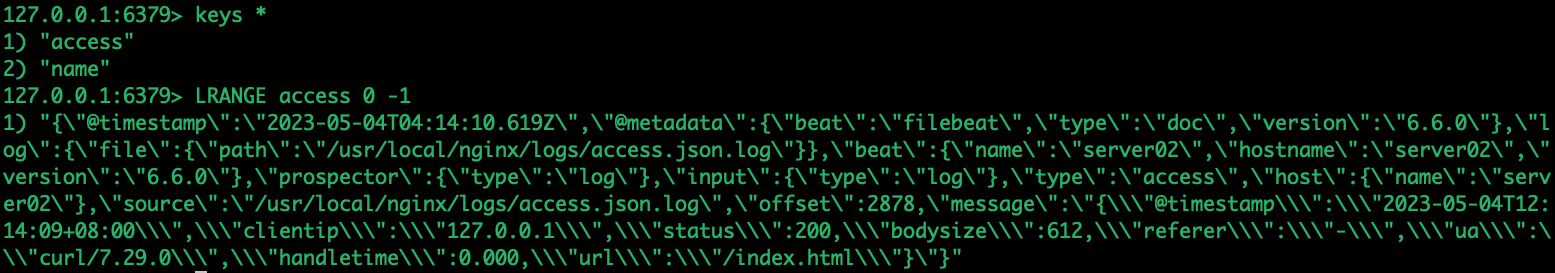

key: 'access'redis日志:

进入redis查看缓存数据
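One way to look at the cached data from the shell is to ask Redis for the length of the list that Filebeat pushes to. The following is a sketch under the assumptions of this article (host 192.168.1.11, password 'ljh', key 'access'); the helper names queue_len and queue_backed_up are made up for illustration.

```shell
#!/bin/sh
# REDIS_CLI can be overridden; the default is the install path used above.
REDIS_CLI="${REDIS_CLI:-/usr/local/redis/bin/redis-cli}"

# queue_len KEY: number of events waiting in the Redis list KEY.
queue_len() {
    "$REDIS_CLI" -h 192.168.1.11 -p 6379 -a ljh llen "$1"
}

# queue_backed_up KEY LIMIT: succeed when more than LIMIT events are waiting.
queue_backed_up() {
    [ "$(queue_len "$1")" -gt "$2" ]
}
```

`queue_len access` should grow while Logstash is stopped and fall back toward 0 once Logstash starts consuming. A single queued event can be inspected with `lrange access 0 0` inside redis-cli.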

Logstash reading data from Redis: /usr/local/logstash-6.6.0/config/logstash.conf

    input {
      redis {
        host => '192.168.1.11'
        port => 6379
        key => "access"
        data_type => "list"
        password => 'ljh'
      }
    }

kill $(ps aux | grep logstash | grep -v grep | awk '{print $2}' | head -1)

nohup /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf >/tmp/logstash.log 2>&1 &

Filebeat (multiple hosts) -> Redis or Kafka -> Logstash (regex) -> Elasticsearch (storage) -> Kibana (display)

Binary installation of the Kafka server

Lab environment

192.168.1.11: Logstash, Kafka, Filebeat

cd /usr/local/src

tar -zxf zookeeper-3.4.13.tar.gz

mv zookeeper-3.4.13 /usr/local/

cp /usr/local/zookeeper-3.4.13/conf/zoo_sample.cfg /usr/local/zookeeper-3.4.13/conf/zoo.cfg

- Edit the configuration /usr/local/zookeeper-3.4.13/conf/zoo.cfg: clientPortAddress=0.0.0.0

- Start ZooKeeper: /usr/local/zookeeper-3.4.13/bin/zkServer.sh start

mv kafka_2.11-2.1.1 /usr/local/kafka_2.11

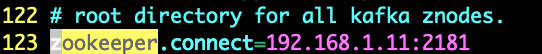

vim /usr/local/kafka_2.11/config/server.properties

Start Kafka in the foreground: /usr/local/kafka_2.11/bin/kafka-server-start.sh /usr/local/kafka_2.11/config/server.properties

Start Kafka in the background: nohup /usr/local/kafka_2.11/bin/kafka-server-start.sh /usr/local/kafka_2.11/config/server.properties >/tmp/kafka.log 2>&1 &

Introducing Kafka between Filebeat and Logstash

Filebeat sending logs to Kafka: /usr/local/filebeat-6.6.0/filebeat.yml

    filebeat.inputs:
    - type: log
      tail_files: true
      backoff: "1s"
      paths:
        - /usr/local/nginx/logs/access.json.log
      fields:
        type: access
      fields_under_root: true
    output:
      kafka:
        hosts: ["192.168.1.11:9092"]
        topic: ljh

Logstash reading from Kafka: /usr/local/logstash-6.6.0/config/logstash.conf

    input {
      kafka {
        bootstrap_servers => "192.168.1.11:9092"
        topics => ["ljh"]
        group_id => "ljh"
        codec => "json"
      }
    }
    filter {
      if [type] == "access" {
        json {
          source => "message"
          remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
        }
      }
    }
    output {
      if [type] == "access" {
        elasticsearch {
          hosts => ["http://192.168.1.10:9200"]
          index => "access-%{+YYYY.MM.dd}"
        }
      }
    }

Result observed in Kibana:

Alternatively, point the output at standard output first to check the events before sending them to Elasticsearch:

    output {
      stdout {
        codec => rubydebug
      }
    }

Logstash log

- List consumer groups: ./kafka-consumer-groups.sh --bootstrap-server 192.168.1.11:9092 --list

- Inspect the queue for a group: ./kafka-consumer-groups.sh --bootstrap-server 192.168.1.11:9092 --group ljh --describe

When messages pile up faster than Logstash consumes them, the LAG value rises.
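To watch the backlog as a single number, the LAG column of the --describe output can be summed across partitions. The sketch below finds the column by its header name rather than by position, since the column layout differs between Kafka versions. The function name total_lag is an assumption for this example.

```shell
#!/bin/sh
# total_lag: read `kafka-consumer-groups.sh --describe` output on stdin
# and print the sum of the LAG column over all partitions.
total_lag() {
    awk '
        # Until the header row is seen, look for the LAG column and skip the line.
        col == 0 { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
        # Sum numeric LAG values; a "-" (no committed offset yet) is ignored.
        $col ~ /^[0-9]+$/ { sum += $col }
        END { print sum + 0 }
    '
}
```

Run from the Kafka bin directory, this would look like `./kafka-consumer-groups.sh --bootstrap-server 192.168.1.11:9092 --group ljh --describe | total_lag`.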

Published by LJH. Please credit the source when reposting: https://www.ljh.cool/37120.html